For nearly a decade now, hosting enterprise applications on a cloud infrastructure provider such as Amazon (AWS), Microsoft (Azure) or Google (GCP) has been a no-brainer, at least for organisations that understand the business (aka financial) value of the cloud.

However, the story doesn’t end when you migrate from on-premises infrastructure to the cloud. In fact, this is where the twist begins! Once you have chosen your preferred cloud provider and moved to the cloud, you may face challenges that hamper your migration goals. It’s important to be aware of them so you can take the right steps to overcome them and extract maximum value from your cloud investment.

One major challenge is moving deliberately and systematically away from the archaic deployment and scaling methods you’re used to, and embracing a “containerised” development paradigm instead. Organisations are made of people, and people usually don’t like change.

The general thinking is: if it ain’t broke, why fix it? Unfortunately, this kind of organisational thinking is counter-productive, and can be downright harmful. Clinging to old-fashioned deployment methods that don’t fit the fresh approach cloud computing (justifiably) calls for can do a lot of damage to your organisation’s technology stack, and to your enterprise in general.

We’re not going to lie: every transition project involves a real learning curve. This is especially true for smaller organisations or teams that lack the bandwidth, knowledge and expertise to set up a sophisticated CI/CD pipeline that automates many of the manual tasks a transition involves.

Nium prides itself on being a highly innovative, globally renowned firm that has been at the cutting edge of FinTech for a number of years. But we too were once in a similar situation, not only for the reasons mentioned above, but also because the legacy software in question was the organisation’s primary business software.

According to several war stories (which have since become legendary at Nium), the original code for this software was written against a three-to-four-month launch deadline. Such a tight timeframe left no room to plan for future scaling. As a result, very little attention was paid to key aspects like abstraction, modularity, and integration and unit testing.

Without sufficient integration and unit tests, predicting the consequences of any refactoring was next to impossible. Still, we were determined to adopt a microservices-based, container-driven approach to software delivery, because we realised that the benefits of the new container workflow (greater efficiency and portability, easier maintenance and, of course, greater scalability) far outweighed the challenges of restructuring our organisation around its processes.

To achieve this, we followed the Twelve-Factor App methodology, a systematic and proven set of practices for building modern, scalable and maintainable software-as-a-service apps. It enabled us to gradually refactor only the parts that had to change to move to a containerised software delivery environment.

This approach, which covers everything from codebase, dependencies and processes to backing services, disposability and logs, helped us minimise divergence between development and production. It enabled continuous deployment for greater agility, and let us scale without significant changes to tooling, architecture or development practices.

We also love that this approach offers enhanced portability between execution environments, which was one of our key objectives for this transition.
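Two of those factors, config and logs, are a large part of what lets one container image move unchanged between environments. The sketch below shows both in Node.js: settings are read from environment variables (failing fast when a required one is missing), and log events are written to stdout as single JSON lines for the platform to collect. It is an illustration, not Nium’s code; the variable names `DATABASE_URL`, `PORT` and `LOG_LEVEL` and the helpers `loadConfig`, `formatLog` and `log` are hypothetical.

```javascript
"use strict";
// A minimal sketch of two twelve-factor practices (names are hypothetical):
//  - Config: deploy-specific settings come from environment variables, so
//    the same container image runs unchanged in every environment.
//  - Logs: the app writes one event per line to stdout and leaves routing
//    and storage to the platform (e.g. Docker's log driver).

function loadConfig(env = process.env) {
  // Fail fast at start-up if a required setting is absent.
  const required = ["DATABASE_URL"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing required environment variables: ${missing.join(", ")}`);
  }

  // Optional settings fall back to defaults when unset or empty.
  const port = Number(env.PORT || 8080);
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new Error(`Invalid PORT: ${env.PORT}`);
  }

  // Freeze the result so config cannot be mutated at runtime.
  return Object.freeze({
    port,
    databaseUrl: env.DATABASE_URL,
    logLevel: env.LOG_LEVEL || "info",
  });
}

// Format one log event as a single JSON line; the fixed keys win over
// any caller-supplied fields with the same name.
function formatLog(level, message, fields = {}, now = new Date()) {
  return JSON.stringify({ ...fields, time: now.toISOString(), level, message });
}

// Write the event to stdout instead of managing log files in the container.
function log(level, message, fields) {
  process.stdout.write(formatLog(level, message, fields) + "\n");
}
```

At deploy time the values would be supplied by the runtime rather than baked into the image, for example with `docker run -e DATABASE_URL=...` locally, or through the `environment` settings of an ECS task definition.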

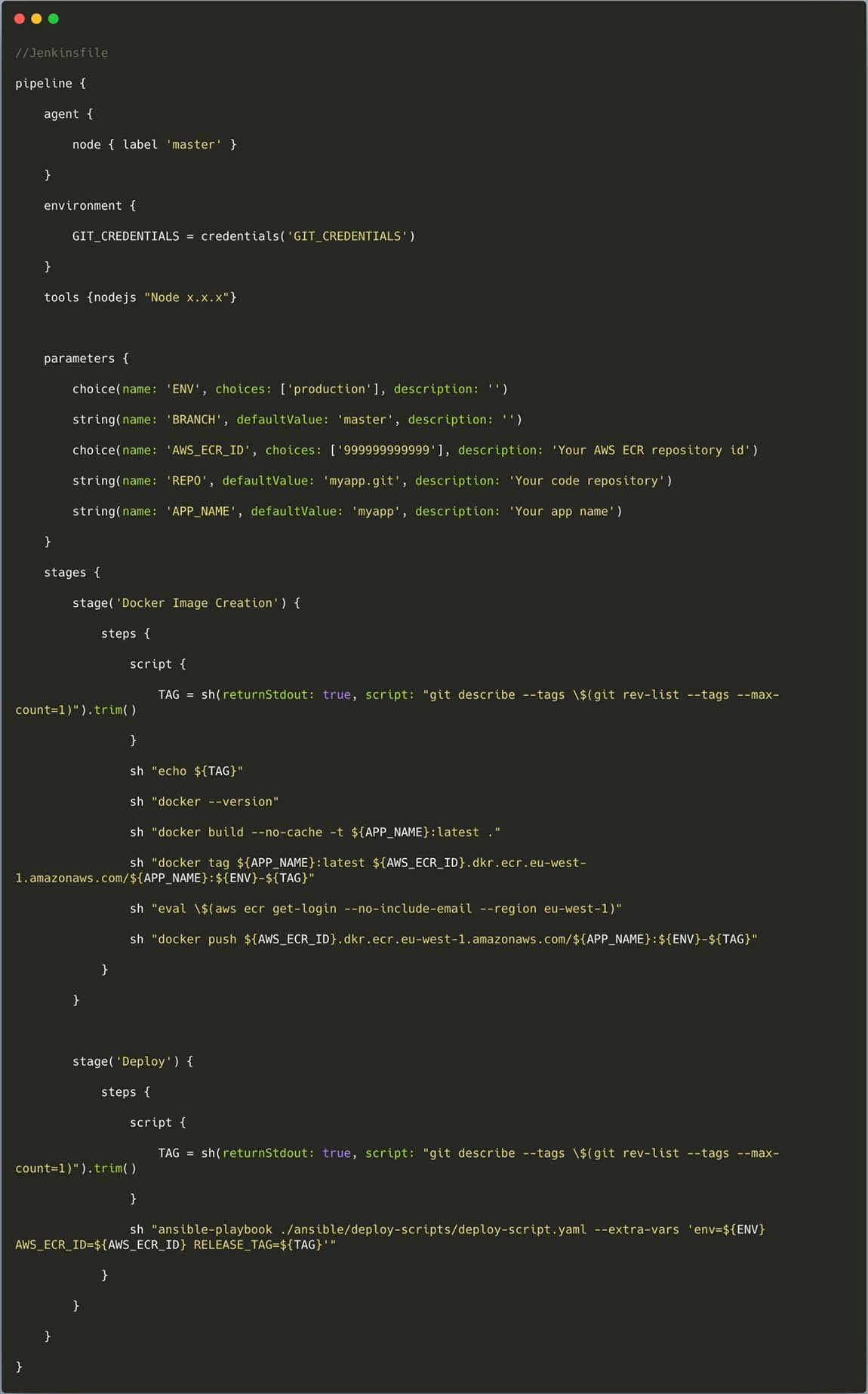

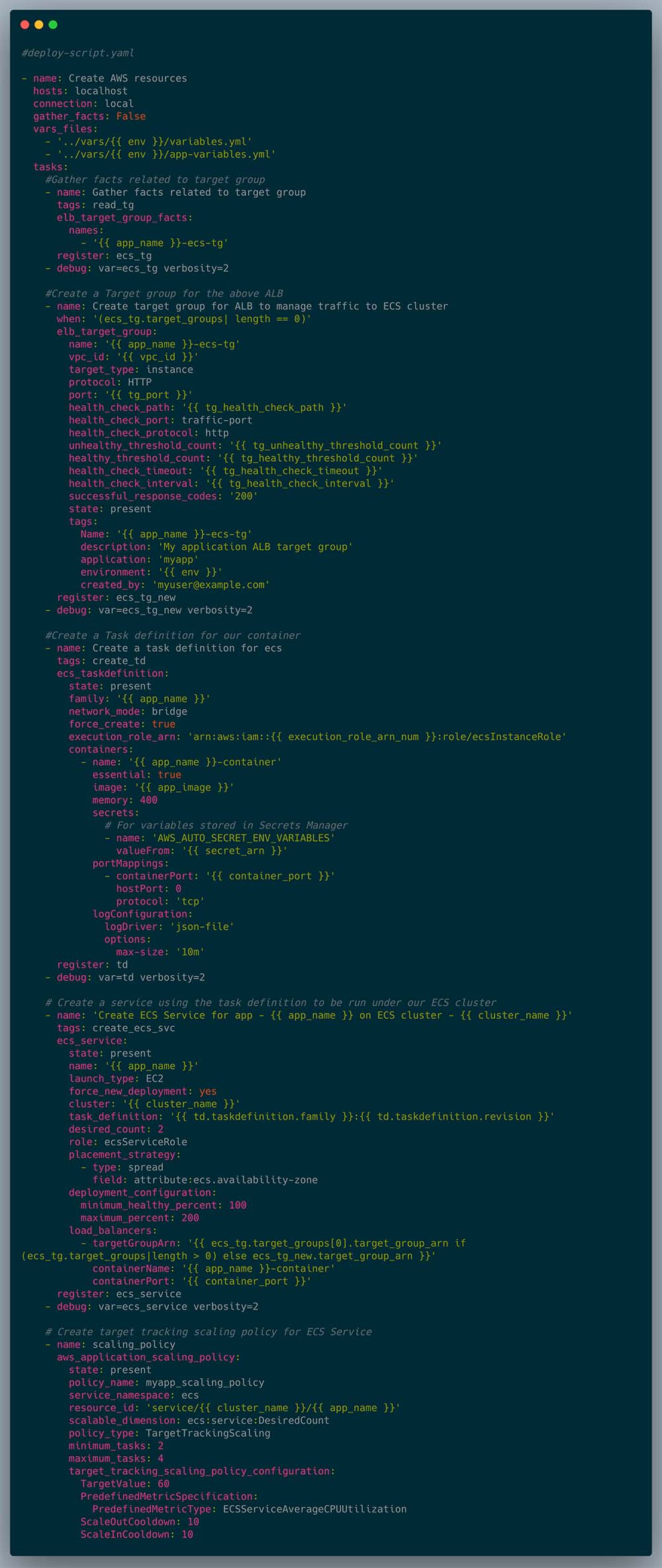

To manage and complete our transition on time and with minimal disruption to business continuity, we used the following technologies to bundle, publish, provision and deploy our applications:

- Docker: To package applications with the environment and all dependencies into a “box”, called a container

- AWS ECR (Elastic Container Registry): To store, manage, share, and deploy our container images and artefacts

- AWS ECS (Elastic Container Service): To run and orchestrate our containers, including sensitive and mission-critical applications

- Red Hat Ansible: To automate application deployment, intra-service orchestration, configuration management, and provisioning
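To make the bundling and publishing steps concrete, here is a sketch that assembles the shell commands for building an image with Docker and pushing it to ECR. The ECR login pipeline and the registry host format (`<account-id>.dkr.ecr.<region>.amazonaws.com`) follow AWS’s documented conventions for the standard AWS partition; the helper names, the `payments-api` repository and the commit-SHA tag are hypothetical.

```javascript
"use strict";
// Hypothetical helpers that turn build metadata into the commands for the
// "bundle" (docker build) and "publish" (push to Amazon ECR) steps.
// Assumes the standard AWS partition, where registry hosts end in amazonaws.com.

function ecrRegistry(accountId, region) {
  if (!/^\d{12}$/.test(accountId)) {
    throw new Error(`AWS account IDs are 12 digits, got: ${accountId}`);
  }
  return `${accountId}.dkr.ecr.${region}.amazonaws.com`;
}

// `tag` would typically be immutable, e.g. the git commit SHA (assumption),
// so every deployed image can be traced back to its source.
function publishCommands({ accountId, region, repository, tag }) {
  const registry = ecrRegistry(accountId, region);
  const localImage = `${repository}:${tag}`;
  const remoteImage = `${registry}/${localImage}`;
  return [
    // Authenticate the Docker client against the private ECR registry.
    `aws ecr get-login-password --region ${region} | docker login --username AWS --password-stdin ${registry}`,
    // Bundle: build the image from the Dockerfile in the current directory.
    `docker build -t ${localImage} .`,
    // Publish: tag the image with its full ECR URI, then push it.
    `docker tag ${localImage} ${remoteImage}`,
    `docker push ${remoteImage}`,
  ];
}
```

Emitting commands rather than running them keeps the sketch testable; in practice a CI job or an Ansible task would execute them. Rolling the new image out on ECS then typically means registering a new task definition revision that references the pushed image URI and updating the service to use it.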

We could delve into the details of how our Dockerfile works, but they have already been captured very well here.

First, we created a new directory for all the files. In this directory, we created a package.json file to describe our app and its dependencies, ran npm install, and created a server.js file that defines a web app using the Express.js framework. In the Dockerfile, we then used the CMD instruction to define the command that runs our app at runtime. Alongside the Dockerfile, we also created a .dockerignore file to prevent local modules and debug logs from being copied into our Docker image, where they could overwrite the modules installed within it.
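A minimal version of the app described in that walkthrough might look like the sketch below. It is not Nium’s code: to keep it runnable without `npm install`, it swaps Express.js for Node’s built-in `http` module, and the `/health` route, the `route`/`createServer`/`start` helpers and the port default are hypothetical. The one container-specific detail worth noting is binding to `0.0.0.0`, so the app is reachable through the port Docker publishes.

```javascript
"use strict";
// App module sketch. Assumption: Node's built-in http module stands in for
// Express.js so the example runs without installing dependencies.
const http = require("http");

// Listen on all interfaces: inside a container, binding only to 127.0.0.1
// would make the app unreachable through Docker's published port.
const HOST = "0.0.0.0";
// Hypothetical default; a real deployment would take the port from config.
const PORT = Number(process.env.PORT) || 8080;

// Pure routing logic, kept separate from the server so it can be unit-tested.
function route(method, url) {
  if (method === "GET" && url === "/") {
    return { status: 200, body: "Hello World" };
  }
  // Hypothetical health-check path, e.g. for a load balancer probe.
  if (method === "GET" && url === "/health") {
    return { status: 200, body: "ok" };
  }
  return { status: 404, body: "Not Found" };
}

// Wrap the routing logic in an http.Server without starting it.
function createServer() {
  return http.createServer((req, res) => {
    const { status, body } = route(req.method, req.url);
    res.writeHead(status, { "Content-Type": "text/plain" });
    res.end(body);
  });
}

// Create the server and start listening; returns the http.Server.
function start(port = PORT, host = HOST) {
  const server = createServer();
  server.listen(port, host, () => console.log(`Listening on ${host}:${port}`));
  return server;
}

module.exports = { route, createServer, start };
```

The module exports `start()` rather than calling it, so the routing can be tested in isolation; a real `server.js` would end with a call to `start()` so that a Dockerfile instruction such as `CMD ["node", "server.js"]` launches the server.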

If you’re wondering which Node.js process manager we used to run our enterprise software in production before we moved to containers, it was PM2, running directly on EC2!
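One practical consequence of leaving a process manager like PM2 behind is that the process lifecycle moves to the container runtime: `docker stop` and ECS stop a container by sending `SIGTERM`, then `SIGKILL` if it has not exited after a grace period. A common pattern, sketched below under hypothetical names (`makeShutdownHandler`, `installSignalHandlers`) and a hypothetical 10-second timeout, is to handle `SIGTERM` explicitly and drain open connections before exiting, rather than being killed mid-request.

```javascript
"use strict";
// Hypothetical helper: on a stop signal, stop accepting connections, let
// in-flight requests finish, then exit. `server` is anything with a
// Node-style close(callback), such as an http.Server.
function makeShutdownHandler(server, options = {}) {
  const {
    exit = (code) => process.exit(code),
    // Assumption: keep this below the orchestrator's stop timeout so the
    // process exits on its own before SIGKILL arrives.
    timeoutMs = 10000,
    log = console.log,
  } = options;
  let shuttingDown = false;

  return function shutdown(signal) {
    if (shuttingDown) return; // ignore repeated signals during shutdown
    shuttingDown = true;
    log(`Received ${signal}, draining connections`);

    // Safety net: keep-alive connections can delay close(), so force a
    // non-zero exit if draining takes too long. unref() stops this timer
    // from keeping the event loop alive on its own.
    const timer = setTimeout(() => exit(1), timeoutMs);
    timer.unref();

    // Stop accepting new connections; the callback runs once existing ones end.
    server.close((err) => {
      clearTimeout(timer);
      exit(err ? 1 : 0);
    });
  };
}

// SIGTERM is what `docker stop` and ECS send; SIGINT covers Ctrl-C locally.
function installSignalHandlers(server, options) {
  const shutdown = makeShutdownHandler(server, options);
  process.on("SIGTERM", () => shutdown("SIGTERM"));
  process.on("SIGINT", () => shutdown("SIGINT"));
  return shutdown;
}
```

`installSignalHandlers(server)` would be called once, right after the server starts listening. With one Node.js process per container, horizontal scaling then typically comes from running more ECS tasks rather than more processes on a single host.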

Nium’s leadership depends on our development team to speed up the delivery of new functionality to various lines of business. In the past, however, we found that the actual deployment of new or enhanced application code did not always keep pace with the business. Infrastructure challenges, along with challenges around compute resource utilisation, code/platform/cloud portability and application security, were also crippling our development and delivery. By adopting a containerised approach, we achieved greater efficiency, better resource utilisation and more agile integration with our existing DevOps environment.

Just as importantly, we now deliver enhancements faster, and our apps start up faster and scale more easily. For Nium, modernisation through containerisation has definitely improved application lifecycle management through CI/CD. We hope it delivers the same benefits for your organisation!

There you have it! This is the systematic process we used to migrate successfully from legacy software to a more flexible, agile, efficient and cost-effective containerised environment. By no means are we suggesting that it is the only route to containerisation. However, it worked well for us. For organisations that have not yet transitioned their legacy software, applications or processes into the containerised paradigm, we believe it is a great first step towards an agile CI/CD pipeline that leads to developer delight, not to mention organisational success.

Contributed by Nium

Updated on 6th March 2021